DALL·E 2 – a new tool powered by AI is creating images that wow the internet

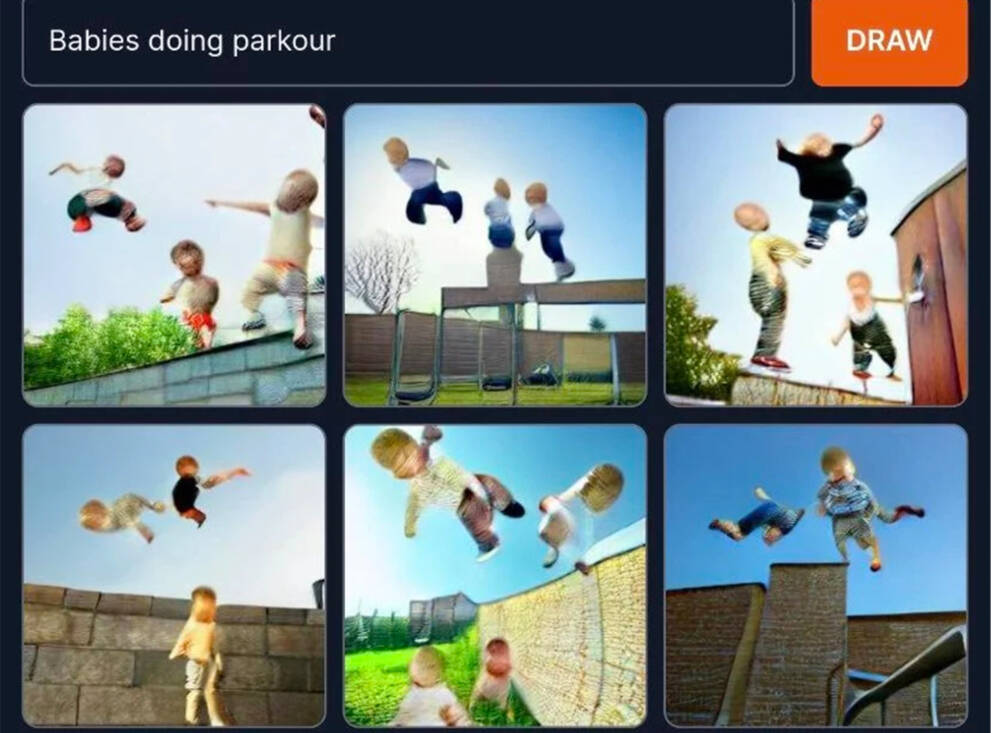

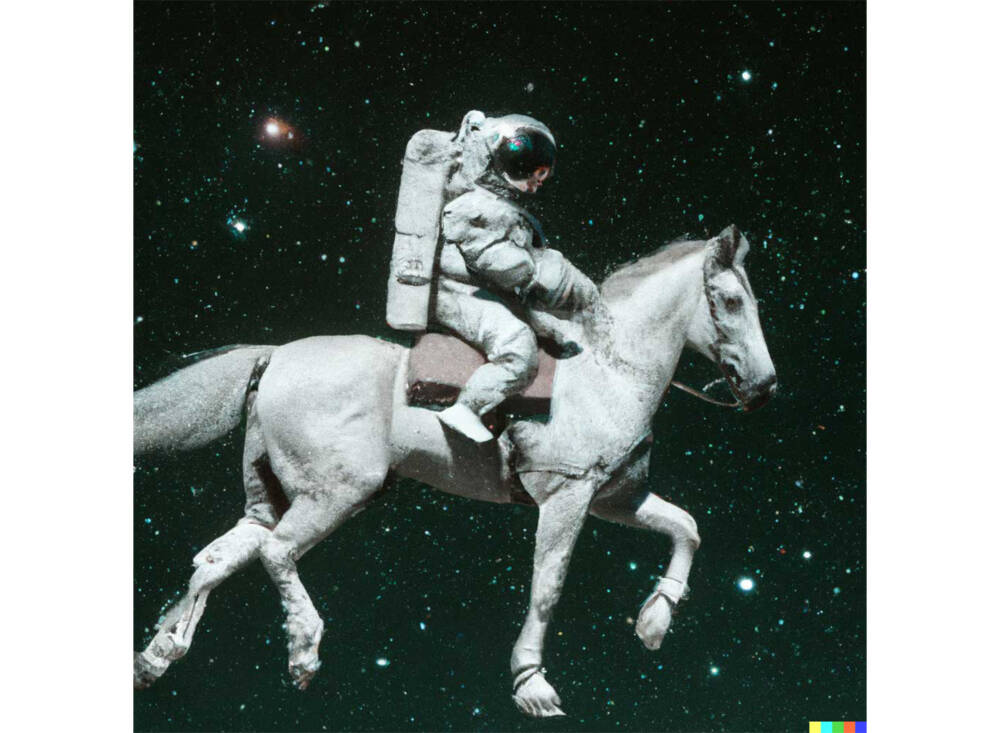

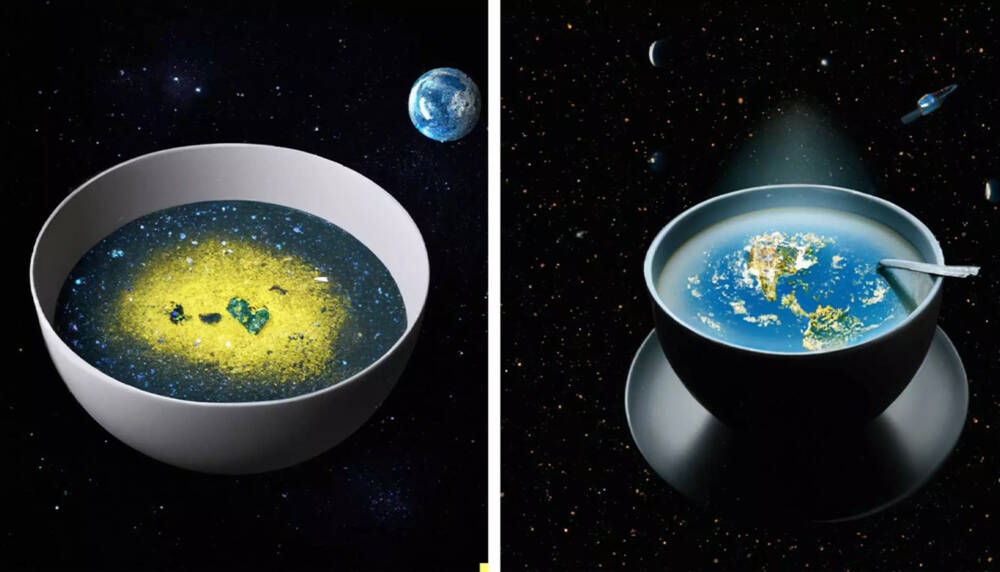

Have you ever seen a donkey eating a smoothie with a paddle or babies doing parkour? DALL·E 2 by OpenAI is a new, advanced artificial intelligence system for creating images, and it exposes the darkest recesses of your imagination. It takes simple text descriptions like “cookie monster death metal album cover” and turns them into photorealistic images that have never existed before. So is manipulating visual concepts through language now within reach? Let’s sort it out.

Who is OpenAI?

OpenAI is an artificial intelligence research and deployment company whose mission is to ensure that artificial general intelligence (AGI) is safe and benefits all of humanity. OpenAI was co-founded in 2015 by, among others, Elon Musk, who left the board three years later, and Sam Altman, a protégé of early investor Peter Thiel. It is financially backed by Microsoft and competes with Google, Amazon and Facebook in the race to develop the best AI technology; all of them are building AI tools using similar systems. OpenAI’s first-of-its-kind API can be applied to any language task and serves millions of production requests each day. In January 2021, OpenAI introduced DALL·E.

DALL·E what?

DALL·E is an AI system that generates images from text descriptions, whatever prompt you give it. OpenAI presented the first DALL·E in January 2021 and launched its newest system, DALL·E 2, one year later. It now generates more realistic and accurate images with 4x greater resolution. Until then, DALL·E had been used only by a select group of testers, mostly researchers, academics, journalists and artists, but OpenAI then announced it would invite more people to the party. The company is letting in up to 1 million people from its recently started waitlist as it moves from its research phase into its beta stage.

The excitement also inspired free imitations like DALL·E mini. Its far less impressive, low-quality renderings helped turn AI image generation into a hobby for some. DALL·E mini recently changed its name to Craiyon to avoid confusion; it is not affiliated with OpenAI.

Help! I need to play with DALL·E

Using DALL·E is super simple: you type what you want to see, and seconds later a panel of four images appears, bringing your cheesiest imaginings to life. The possibilities seem endless: you can ask for images that look like photographs or the work of painters, for 3D renderings, or for photos with a cyberpunk look or any other aesthetic. So basically the program takes a text phrase and creates an image out of it, combining concepts, attributes and styles. DALL·E will not produce anything if the description of an image violates its content rules. Instead, it warns users that their accounts could be suspended if they repeatedly try to break the system’s rules.

So how does it actually work?

DALL·E’s algorithm is trained on the hundreds of millions of images it has ingested, together with the text captions associated with them, and it makes rapid-fire associations between the two. The art it creates is not a mishmash of existing images. Rather, each result is a unique image produced by a sophisticated AI model known as a “neural network,” so called because it makes connections in ways loosely inspired by the human brain.

The first version was built on the GPT-3 language model, also from OpenAI. While GPT-3 draws its basic knowledge from a large collection of texts, OpenAI trained DALL·E and its successor on numerous images and their associated descriptions. DALL·E 2 combines two techniques that OpenAI has developed since the release of the first version: CLIP (Contrastive Language-Image Pre-training), an artificial neural network that links visual concepts to the words that describe them, and GLIDE (Guided Language to Image Diffusion for Generation and Editing), a text-guided diffusion model that, according to a paper, outperformed the original DALL·E, particularly in photorealism and in matching the description.
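To get a feel for how a CLIP-style model pairs a picture with a caption, here is a tiny sketch in Python. It is only an illustration: the three-number "embeddings" below are made up by hand, whereas a real model learns much longer vectors from its training data, and the names `cosine_similarity` and `best_caption` are hypothetical helpers, not part of any OpenAI library.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Assumption: hand-written toy vectors standing in for learned text embeddings.
caption_vectors = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a bowl of soup": [0.0, 0.1, 0.9],
}

# Assumption: a toy vector standing in for the embedding of one image.
image_vector = [0.8, 0.2, 0.1]


def best_caption(image_vec, captions):
    """Pick the caption whose vector is most similar to the image vector."""
    return max(captions, key=lambda text: cosine_similarity(image_vec, captions[text]))


print(best_caption(image_vector, caption_vectors))
```

The idea to take away is that images and text end up in the same numeric space, so "how well does this caption fit this picture?" becomes a simple similarity score.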

The program has learned the relationship between images and the text used to describe them. It uses a process called “diffusion,” which starts with a pattern of random dots and gradually alters that pattern towards an image when it recognizes specific aspects of that image.
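The "random dots that gradually become an image" idea can be sketched in a few lines of Python. This is a deliberately simplified toy, not DALL·E's actual algorithm: a real diffusion model uses a trained neural network, guided by the text prompt, to decide how to clean up the noise at each step, while this sketch cheats by already knowing the target picture. The function name `toy_denoise` and its parameters are assumptions made for illustration.

```python
import random


def toy_denoise(target, steps=50, step_size=0.2, seed=0):
    """Start from random pixel values and nudge them toward `target` step by step."""
    rng = random.Random(seed)

    # Step 0: pure noise, one random brightness value per pixel.
    pixels = [rng.uniform(0.0, 1.0) for _ in target]

    for _ in range(steps):
        # Assumption: a real model would predict this correction with a neural
        # network; here we simply move each pixel a fraction of the way to the target.
        pixels = [p + step_size * (t - p) for p, t in zip(pixels, target)]

    return pixels


# A hypothetical 4-pixel "image" with brightness values between 0 and 1.
target_image = [0.0, 0.25, 0.75, 1.0]
result = toy_denoise(target_image)
print([round(p, 3) for p in result])
```

Each pass removes a little of the remaining noise, so after enough steps the random starting pattern has settled very close to the target image.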

Okay, what’s the downside then?

OpenAI hopes that DALL·E 2 will empower people to express themselves creatively. It also helps us understand how advanced AI systems see and understand our world, which is critical to the mission of creating AI that benefits humanity.

However, bad actors could use such a powerful tool to spread disinformation, which is why OpenAI has kept DALL·E closely guarded. Imagine someone using it to fabricate images of the war in Ukraine or to create realistic images of natural disasters that never occurred. So be aware of the risks and limitations.

Joanne Jang, the product manager of DALL·E, says the company is still refining its content rules, which currently prohibit what you might expect: violent, pornographic and hateful content. The rules also ban images depicting ballot boxes and protests, or any image that “may be used to influence the political process or to campaign.” Depictions of real people are banned as well, and the company anticipates establishing more guardrails as its researchers learn how users interact with the system.

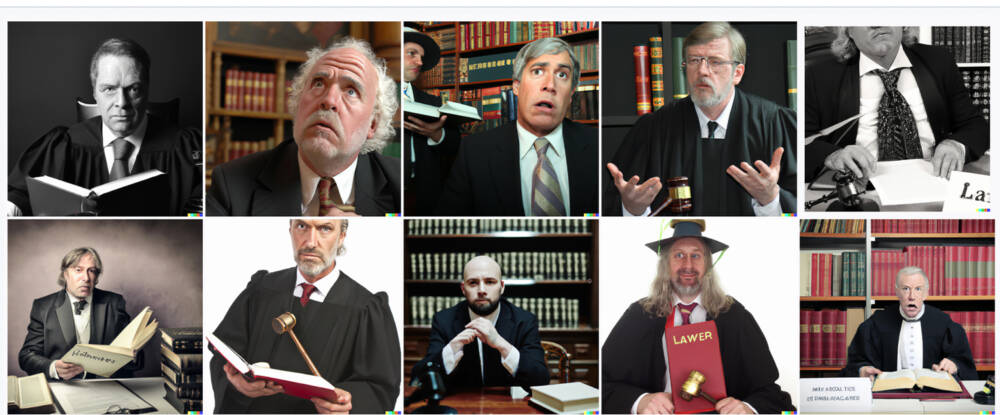

DALL·E 2 also tends to serve completions that suggest stereotypes, including race and gender stereotypes. For example, the prompt “lawyer” results disproportionately in images of people who are White-passing and male-passing in Western dress, while the prompt “nurse” tends to result in images of people who are female-passing.

Credits: Images generated by AI / DALL·E 2 / OpenAI